Bringing AI into healthcare settings is daunting for both patients and healthcare professionals, including administrators and those on the ground in hospitals every day. How can predictive models be trusted? Are they really safe to use, and do they comply with the Health Insurance Portability and Accountability Act of 1996 (HIPAA)? How exactly does sensitive data optimize these models, and to whose benefit? For better or worse, AI often stokes fear. Life-and-death settings like healthcare amplify this fear. To cope with the rising tide of machine learning technologies, many healthcare systems have begun implementing a new C-suite position: Chief of AI.

Perhaps most critically, the term “AI” itself is now so nebulous as to be more counterproductive than helpful in business settings. Generative AI and large language models offer something quite different from models that use patient data to increase predictive power. Lone Star Communications, Inc. endeavors to demystify this buzzword for the healthcare industry and reassure healthcare practitioners that patient confidentiality matters most. Just as with justifiable cybersecurity concerns, there’s no reason why healthcare delivery organization (HDO) leaders shouldn’t feel reassured about the responsible use of AI.

When healthcare technology providers promise solutions that maintain or augment patient care, it’s important to accurately identify expected outcomes before embarking on expensive investments and financial decisions. These decisions have major impacts on long-term business health and growth; they often directly influence the quality of care provision as well.

To this end, HDO leaders should ask challenging questions of technology companies offering solutions based on predictive algorithms. These solutions can reduce staff burnout and help maintain high standards of care, but it’s important to understand how they work.

Key questions should include:

- How Does the AI Model Use Patient Data To Optimize?

- Who Owns the Data?

- What Are the Built-In Guardrails and Parameters?

- How Are the Data Biased?

- How Will Clinicians Know if AI Bots Are Watching and Collecting Data?

How Does the AI Model Use Patient Data To Optimize?

Forward-thinking healthcare technology service providers use cloud services to train their data models. They do this because post-COVID, every major cloud provider, including Amazon Web Services (AWS), Microsoft Azure and Google Cloud Platform (GCP), must remain HIPAA-compliant. When the world went online during lockdown, HIPAA compliance became a top priority. Healthcare providers that gather and store sensitive patient data benefit from existing cloud infrastructure, but what ultimately matters most is whether users of the data follow compliance rules.

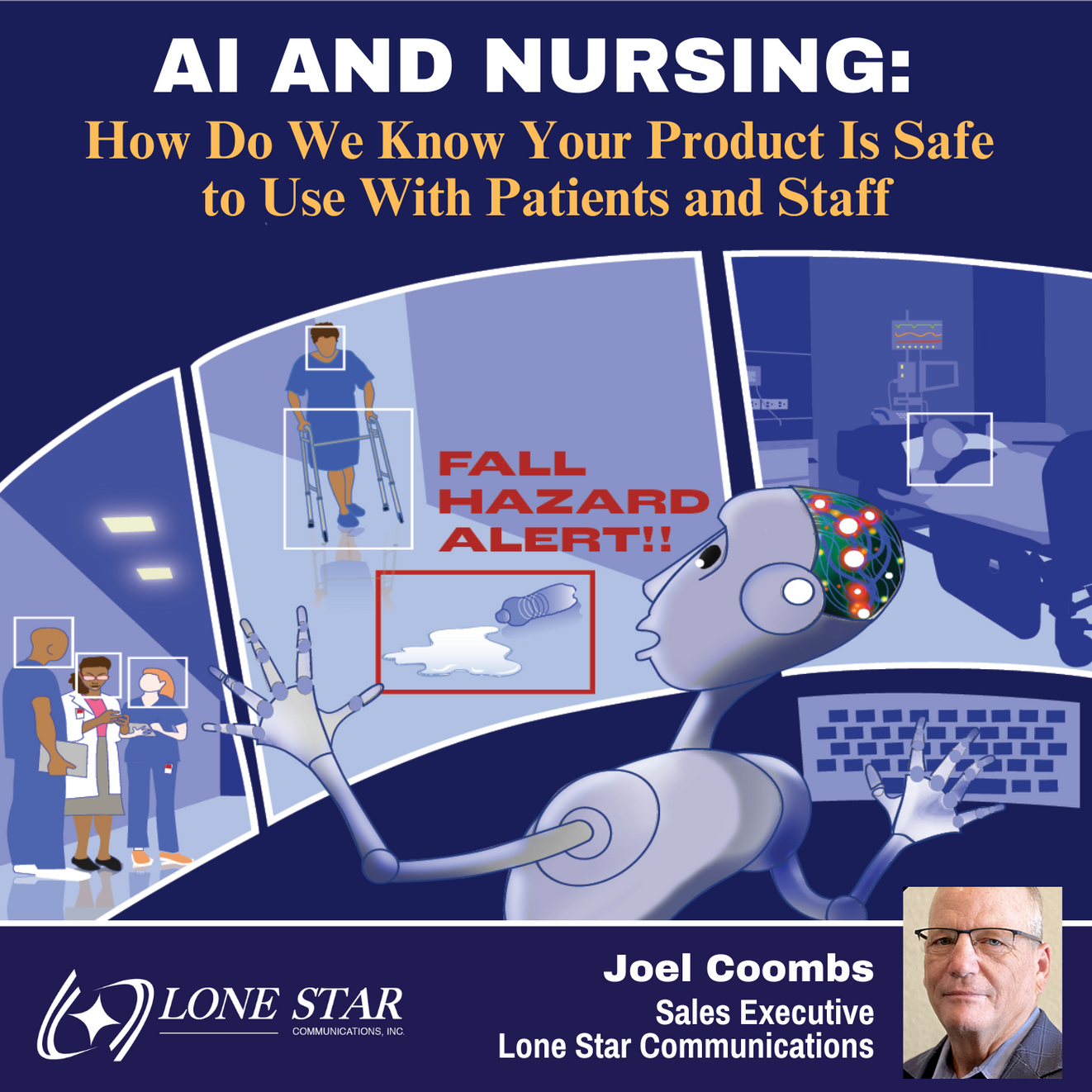

An effective predictive model for HDOs makes the most of pose detection trained on numerous models. Through a process called transfer learning, training such a model and increasing its detection accuracy often requires much less data than might initially be assumed. For a model that helps prevent patient falls, dozens of images of patients getting in and out of beds are enough, as long as they feature a variety of rooms, objects and lighting levels.

To train a model in no more than two to three weeks, administrators should use data that represents many different patients, staff members and healthcare workers — with extra care taken to identify edge cases, such as photos of suited executives that might be misidentified as family members.
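
The transfer-learning idea described above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration, not the method of any particular vendor: `frozen_backbone` is a hypothetical stand-in for a pretrained pose-detection network, the three-number "pose" is synthetic, and the small logistic-regression head is simply one common choice for the trainable part. The point it shows is that only the small head is trained, which is why a few dozen examples can be enough.

```python
import math
import random


def frozen_backbone(pose):
    """Hypothetical stand-in for a pretrained pose model; never updated here."""
    head_y, hip_y, foot_y = pose
    # Two fixed features: torso height and leg height difference.
    return [head_y - hip_y, hip_y - foot_y]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs=500, lr=0.5):
    """Train only a small logistic-regression head on the frozen features."""
    feats = [frozen_backbone(s) for s in samples]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w, b, pose):
    x = frozen_backbone(pose)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5


def make_synthetic_set(n, seed=0):
    """Synthetic data only: label 1 = sitting up, label 0 = lying down."""
    rng = random.Random(seed)
    samples, labels = [], []
    for i in range(n):
        sitting_up = i % 2 == 1
        hip_y = rng.uniform(0.4, 0.6)
        if sitting_up:
            head_y = hip_y + rng.uniform(0.3, 0.5)
        else:
            head_y = hip_y + rng.uniform(-0.05, 0.05)
        foot_y = hip_y + rng.uniform(-0.05, 0.05)
        samples.append((head_y, hip_y, foot_y))
        labels.append(1 if sitting_up else 0)
    return samples, labels


# A few dozen labeled examples, in the spirit of the "dozens of images" above.
samples, labels = make_synthetic_set(48)
w, b = train_head(samples, labels)
accuracy = sum(predict(w, b, s) == bool(y) for s, y in zip(samples, labels)) / len(samples)
```

In a real deployment the backbone would be an actual pretrained network and the inputs would be images rather than three numbers; the division of labor between a frozen base and a small trained head is the part this sketch is meant to convey.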

Following this process, healthcare professionals and technology providers can work together to choose the best data. Critically, crossover between hospital data is unnecessary, ensuring HIPAA compliance across the board. As soon as the AI model uses a data set, administrators should delete it to protect patient privacy. So long as patient privacy is front and center of any predictive model usage, there’s far more gain than downside or risk.
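
One way to make the delete-after-use practice hard to forget is to tie the data's lifetime to the training step itself. The sketch below assumes a hypothetical helper, `ephemeral_training_data`, and a made-up file name; it copies data into a temporary working directory and removes that directory as soon as the training block exits, even if training raises an error. Note that ordinary file deletion is not the same as verified secure erasure, which would need to be handled separately.

```python
import shutil
import tempfile
from contextlib import contextmanager
from pathlib import Path


@contextmanager
def ephemeral_training_data(source_files):
    """Hypothetical helper: stage files for training, then always delete them."""
    workdir = Path(tempfile.mkdtemp(prefix="pilot_data_"))
    try:
        for name, content in source_files.items():
            (workdir / name).write_bytes(content)
        yield workdir
    finally:
        # Runs whether training succeeded or failed.
        shutil.rmtree(workdir, ignore_errors=True)


# Placeholder bytes stand in for a real image; no patient data is used here.
with ephemeral_training_data({"bed_exit_001.jpg": b"placeholder bytes"}) as workdir:
    files_seen = sorted(p.name for p in workdir.iterdir())
    # Model training would happen here, while the data still exists.

still_exists = workdir.exists()
```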

Who Owns the Data?

HDOs frequently need reassurance that AI pilot programs that use confidential data are successful — and that the data has been deleted after integration into models. Organizations that don’t opt into a federated learning model that pulls data from a variety of systems (for more standardized outcomes across disparate locations) should choose technology providers that provide the confidence they need.

In a non-federated learning model, users own the data they use to train pilots. For additional and foundational data owned by service providers, software maintenance agreements may enable continued use of that data beyond the pilot.

What Are the Built-In Guardrails and Parameters?

Machine learning systems need guardrails. Without clear parameters, AI models are unfit for deployment, presenting an unacceptable and potentially catastrophic level of risk. Given the tendency to anthropomorphize algorithms and machine learning as autonomous processes, this consideration matters even more with AI.

During the early days of collaboration between healthcare organizations and technology providers, understanding the algorithms themselves can address most privacy concerns. Additionally, those who build foundational models are keenly aware of the need for specific parameters.

How Are the Data Biased?

All humans naturally tend toward some level of bias, so they naturally (and inadvertently) introduce it into the AI models they train. Therefore, those working with predictive models should strive to eliminate as much bias as possible.

Training on top of the models owned by the tech giants can minimize the innate bias of these models, which could compromise accuracy if it is not addressed during the training process. What matters most, though, is understanding and recognizing how the data are biased.

Additionally, responsible technology providers should be as upfront as possible with healthcare organizations about the reality of bias and how they manage it. Any service provider that promises zero bias in its models is usually unsuitable for healthcare organizations.

How Will Clinicians Know if AI Bots Are Watching and Collecting Data?

There’s no question that hospitals need to collect data for more accurate predictive outcomes and better patient care. Building rigorous monitoring, plans and workflows into AI systems can be the difference between life and death.

HDOs need to ensure that predictive model-based technologies contain failsafe mechanisms. In the case of a “code blue” failure, due to complex integrations that may not work as planned, nurse call system lights can act as backups. With this kind of failsafe, clinicians can react only to critical situations rather than all of them.
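
The failsafe pattern described above can be sketched in a few lines. All names here (`raise_code_blue`, `send_ai_alert`, `activate_call_light`, the room identifier) are hypothetical and do not correspond to any real nurse call or alerting API; the sketch only shows the control flow of trying the integrated path first and falling back to the simpler one when it fails.

```python
def raise_code_blue(room, send_ai_alert, activate_call_light):
    """Try the AI-driven alert first; fall back to the nurse call light."""
    try:
        send_ai_alert(room)
        return "ai_alert"
    except Exception:
        # Any integration failure triggers the low-tech backup path.
        activate_call_light(room)
        return "nurse_call_light"


def broken_integration(room):
    """Simulates a complex integration that does not work as planned."""
    raise ConnectionError("alert gateway unreachable")


lit_rooms = []  # stands in for the physical call lights
outcome = raise_code_blue("room-12", broken_integration, lit_rooms.append)
```

Catching every exception is deliberate in this sketch: for a backup path, failing over too often is generally preferable to missing an alert.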

If a clinician wants to manually check whether AI-powered hardware is operating as expected, they can use something as low-tech as a hand signal to prompt communication back from the device. Hospitals can also leverage already-deployed apps and systems to assess the status of newer AI systems in real time. Most importantly, these new systems supplement and elevate healthcare providers’ capabilities for all patients.

Final Thoughts

AI and predictive modeling only learn effectively and help deliver desired outcomes when real people partner with each other.

Using big data for these outcomes means meeting HIPAA and FDA requirements that are easier to meet thanks to powerful, transparent and accessible databases. Healthcare technology providers can also demonstrate to customers what sensitive data look like via these same databases, showing their true potential when part of a federated model.

It’s tempting for healthcare organizations to attempt to build and manage sensitive data themselves to guarantee compliance and security. But the best healthcare technology providers offer something more: research-based, data-protective services that utilize the best that AI tools can give, something that is, by definition, improving all the time.

About

Joel Coombs is a seasoned Sales Executive at Lone Star Communications, leading the charge in the Advanced Technology and Professional Sales Division. With an impressive track record spanning over 30 years, Joel brings a wealth of experience in sales and marketing leadership, particularly within the Healthcare and Public Sector industries.

Throughout his career, Joel has cultivated strong relationships with manufacturers, systems integrators, and channel partners, specializing in advanced technologies tailored for mission-critical applications. His deep understanding of client needs coupled with his strategic insights has consistently propelled him to deliver exceptional results.